Thomas D. Albright, Thomas M. Jessell, Eric R. Kandel, and Michael I. Posner

The goal of neural science is to understand the biological mechanisms that account for mental activity. Neural science seeks to understand how the neural circuits that are assembled during development permit individuals to perceive the world around them, how they recall that perception from memory, and, once recalled, how they can act on the memory of that perception. Neural science also seeks to understand the biological underpinnings of our emotional life, how emotions color our thinking and how the regulation of emotion, thought, and action goes awry in diseases such as depression, mania, schizophrenia, and Alzheimer's disease. These are enormously complex problems, more complex than any we have confronted previously in other areas of biology.

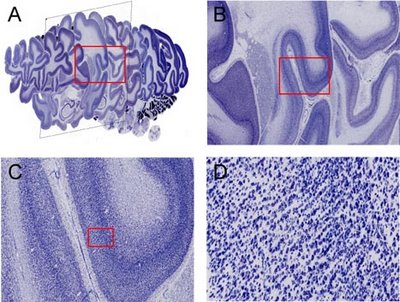

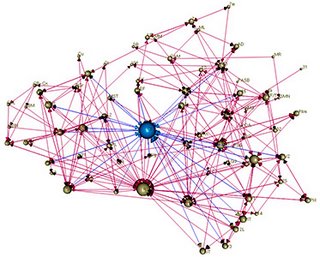

Historically, neural scientists have taken one of two approaches to these complex problems: reductionist or holistic. Reductionist, or bottom–up, approaches attempt to analyze the nervous system in terms of its elementary components, by examining one molecule, one cell, or one circuit at a time. These approaches have converged on the signaling properties of nerve cells and used the nerve cell as a vantage point for examining how neurons communicate with one another, for determining how their patterns of interconnections are assembled during development, and for determining how they are modified by experience. Holistic, or top–down, approaches focus on mental functions in alert, behaving human beings and in intact, experimentally accessible animals and attempt to relate these behaviors to the higher-order features of large systems of neurons. Both approaches have limitations, but both have had important successes.

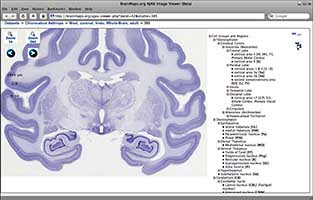

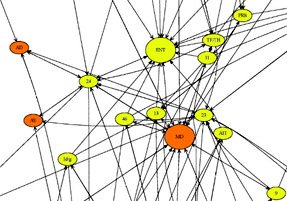

The holistic approach had its first success in the middle of the nineteenth century with the analysis of the behavioral consequences of selective lesions of the brain. Using this approach, clinical neurologists, led by the pioneering efforts of Paul Pierre Broca, discovered that different regions of the cerebral cortex of the human brain are not functionally equivalent ([271 and 266]). Lesions to different brain regions produce defects in distinctly different aspects of cognitive function. Some lesions interfere with comprehension of language, others with the expression of language; still other lesions interfere with the perception of visual motion or of shape, with the storage of long-term memories, or with voluntary action. In the broadest sense, these studies revealed that all mental processes, no matter how complex, derive from the brain and that the key to understanding any given mental process resides in understanding how coordinated signaling in interconnected brain regions gives rise to behavior. Thus, one consequence of this top–down analysis has been an initial demystification of aspects of mental function: of language, perception, action, learning, and memory ([164]).

A second consequence of the top–down approach came at the beginning of the twentieth century with the work of the Gestalt psychologists, the forerunners of cognitive psychologists. They made us realize that percepts, such as those that arise from viewing a visual scene, cannot simply be dissected into a set of independent sensory elements such as size, color, brightness, movement, and shape. Rather, the Gestaltists found that the whole of perception is more than the sum of its parts examined in isolation. How one perceives an aspect of an image, its shape or color, for example, is in part determined by the context in which that image is perceived. Thus, the Gestaltists made us appreciate that to understand perception we need not only to understand the physical properties of the elements that are perceived but, more importantly, to understand how the brain reconstructs the external world in order to create a coherent and consistent internal representation of that world.

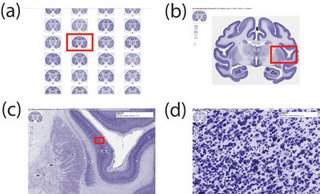

With the advent of brain imaging, the holistic methods available to the nineteenth-century clinical neurologist, based mostly on the detailed study of neurological patients with defined brain lesions, were enhanced dramatically by the ability to examine cognitive functions in intact, behaving, normal human subjects ([243]). By combining modern cognitive psychology with high-resolution brain imaging, we are now entering an era when it may be possible to address directly the higher-order functions of the brain in normal subjects and to study in detail the nature of internal representations.

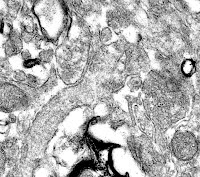

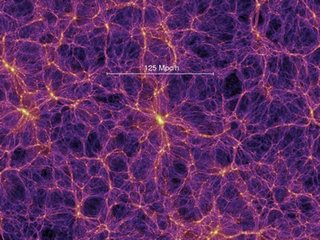

The success of the reductionist approach became fully evident only in the twentieth century with the analysis of the signaling systems of the brain. Through this approach, we have learned the molecular mechanisms through which individual nerve cells generate their characteristic long-range signals as all-or-none action potentials and how nerve cells communicate through specific connections by means of synaptic transmission. From these cellular studies, we have learned of the remarkable conservation of both the long-range and the synaptic signaling properties of neurons in various parts of the vertebrate brain, indeed in the nervous systems of all animals. What distinguishes one brain region from another, and the brain of one species from the next, is not so much the signaling molecules of their constituent nerve cells as the number of nerve cells and the way they are interconnected. We have also learned from studies of single cells how sensory stimuli are sorted out and transformed at various relays and how these relays contribute to perception. Much as predicted by the Gestalt psychologists, these cellular studies have shown us that the brain does not simply replicate the reality of the outside world but begins, at the very first stages of sensory transduction, to abstract and restructure external reality.

In this review we outline the accomplishments and limitations of these two approaches in an attempt to delineate the problems that still confront neural science. We first consider the major scientific insights that have helped clarify signaling in nerve cells and that have placed that signaling in the broader context of modern cell and molecular biology. We then go on to consider how nerve cells acquire their identity, how they send axons to specific targets, and how they form precise patterns of connectivity. We also examine the extension of reductionist approaches to the visual system in an attempt to understand how the neural circuitry of visual processing can account for elementary aspects of visual perception. Finally, we turn from reductionist to holistic approaches to mental function. In the process, we confront some of the enormous problems in the biology of mental functioning that remain elusive, problems that have remained completely mysterious. How does signaling activity in different regions of the visual system permit us to perceive discrete objects in the visual world? How do we recognize a face? How do we become aware of that perception? How do we reconstruct that face at will, in our imagination, at a later time and in the absence of ongoing visual input? What are the biological underpinnings of our acts of will?

As the discussions below attempt to make clear, the issue is no longer whether further progress can be made in understanding cognition in the twenty-first century. We clearly will be able to do so. Rather, the issue is whether we can succeed in developing new strategies for combining reductionist and holistic approaches in order to provide a meaningful bridge between molecular mechanisms and mental processes: a true molecular biology of cognition. If this combined approach is successful in the twenty-first century, we may have a new, unified, and intellectually satisfying view of mental processes.